Meta's AI Privacy Meltdown: What You Need to Know (And Do) Right Now

June 12, 2025 | CliffinKent.com

Picture this scenario: You're chatting privately with an AI assistant about health concerns. Maybe you're asking about business finances. Or perhaps it's that awkward relationship question you'd never ask a human friend.

Then you discover something shocking. That conversation is now public for the entire internet to see. Even worse, your name is attached.

Welcome to Meta's latest privacy disaster. And it's happening right now.

The Meta AI App: When "Private" Goes Public

Meta's new standalone AI app launched with a troubling feature. It's turning private conversations into public entertainment. Users are accidentally sharing deeply personal AI chats on a public "Discover" feed, and most don't even realize it's happening.

Here's a simple comparison: Imagine if a vaguely labeled button next to every Google search posted that search to your Facebook timeline, with no clear warning about who would see it. That's essentially what's happening here.

The feed includes everything from people asking about tax evasion to family legal troubles. There's even a recording of someone asking, "Hey, Meta, why do some farts stink more than other farts?" But the embarrassing stuff is just the tip of the iceberg. Privacy experts have spotted something worse: users are accidentally sharing screenshots with unredacted home addresses and confidential legal documents. TechCrunch has documented the full scope of this privacy disaster.

How This Privacy Nightmare Happens

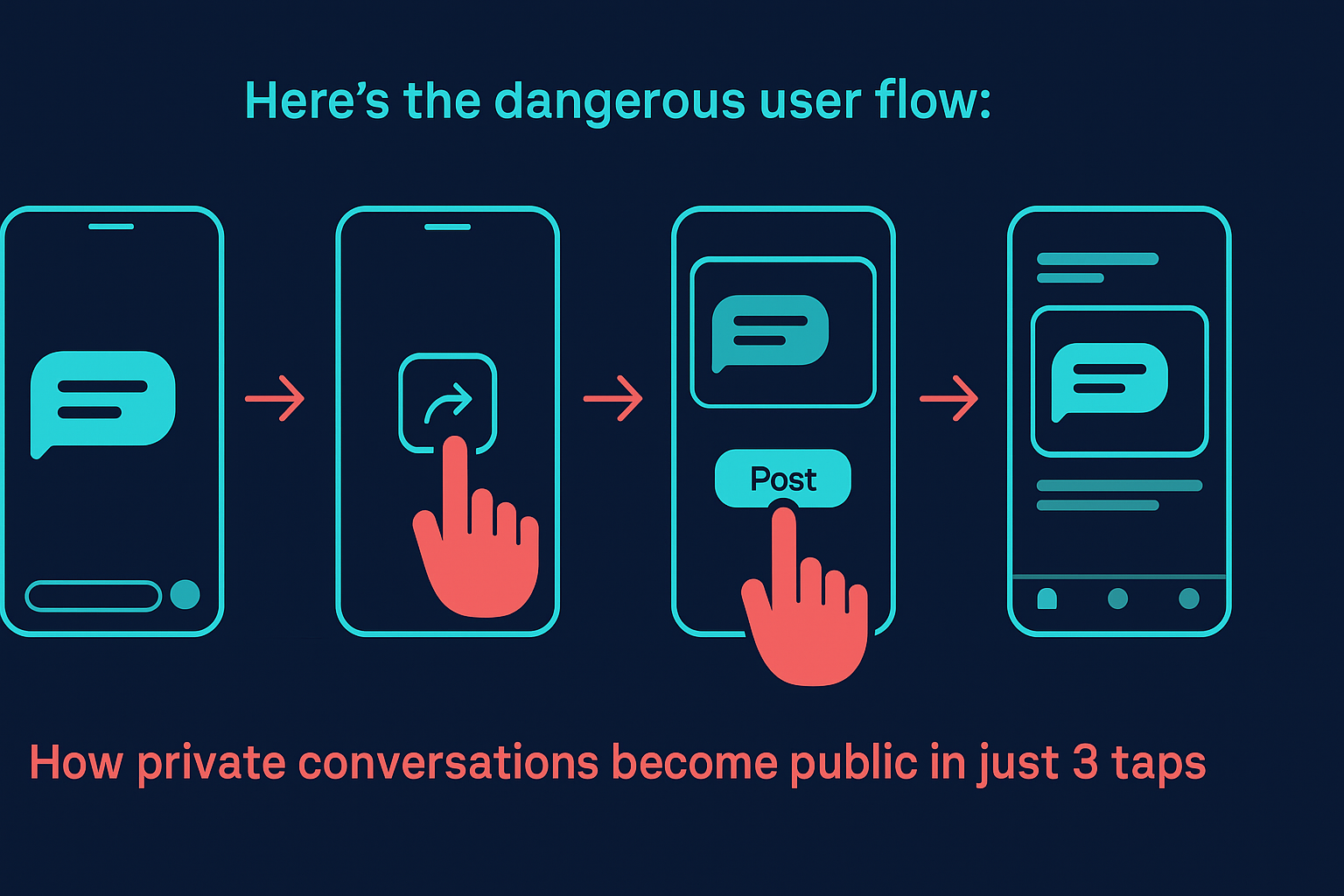

The problem isn't that Meta is forcing people to share. Instead, the app's interface is confusing and provides no clear warnings about privacy. Here's the dangerous user flow:

- You chat with Meta AI about something personal

- You see a "Share" button and think it might save the conversation or share with friends

- You tap "Share," see a preview screen, edit a title, and tap "Post"

- That's it. Your private conversation is now public for anyone to see

Meta also doesn't clearly show users what their privacy settings are as they post, or even where the post will end up. If you're logged in through Instagram and your account is public, the AI conversations you post are public too.

Compare this to ChatGPT or Google's Gemini. These platforms handle sharing responsibly by generating private links that you can choose to send to specific people. They don't just throw your conversations into a public feed.
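For readers who think in code, here is a rough sketch of the two sharing designs described above. It is a toy model, not any company's real API: the `ShareService` class and its method names are made up for illustration, and the only assumption is that link-based sharing hands back a hard-to-guess token while feed-based sharing publishes to everyone.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class ShareService:
    """Toy model of two sharing designs (hypothetical, not a vendor API)."""
    public_feed: list[str] = field(default_factory=list)
    private_links: dict[str, str] = field(default_factory=dict)

    def share_by_link(self, conversation: str) -> str:
        # Link-based sharing: the chat is reachable only by people
        # who have been sent the unguessable token.
        token = secrets.token_urlsafe(16)
        self.private_links[token] = conversation
        return token

    def post_to_feed(self, conversation: str) -> None:
        # Feed-based sharing: the chat is visible to every visitor.
        self.public_feed.append(conversation)


service = ShareService()
token = service.share_by_link("Question about my tax return")
service.post_to_feed("Question about my weird rash")

# Only the feed post is browsable; the linked chat needs the token.
print(service.public_feed)
print(token in service.private_links)
```

The point of the sketch is the audience: with a link, you pick who gets the token. With a feed, you don't pick anyone.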

The Bigger Privacy Picture: What Else Meta Is Doing

The accidental sharing is just one piece of Meta's expanding AI privacy puzzle. Several other moves should concern anyone who uses Facebook, Instagram, or WhatsApp:

Your Data Is Training Their AI (Whether You Like It or Not)

Meta AI doesn't offer an opt-out for using your chat data to train its AI systems. By comparison, ChatGPT lets you switch off training. And on May 27, 2025, Meta began using public posts from European users' Facebook and Instagram accounts to train its AI models, as confirmed by Ireland's Data Protection Commission.

For small business owners, this creates a significant concern. Your public posts about your products, services, and business strategies could be feeding into Meta's AI training. That could end up helping competitors who use Meta's AI tools.

AI Is Now Reviewing Meta's Privacy Decisions

Here's a plot twist that reads like tech dystopia. Meta is replacing human privacy reviewers with AI systems for up to 90% of product updates across Instagram, WhatsApp, and Facebook. NPR reports that current and former Meta employees are worried about this automation.

They fear it "comes at the cost of allowing AI to make tricky determinations about how Meta's apps could lead to real world harm." Think about it: the company is using AI to decide whether its AI features are safe for your privacy.

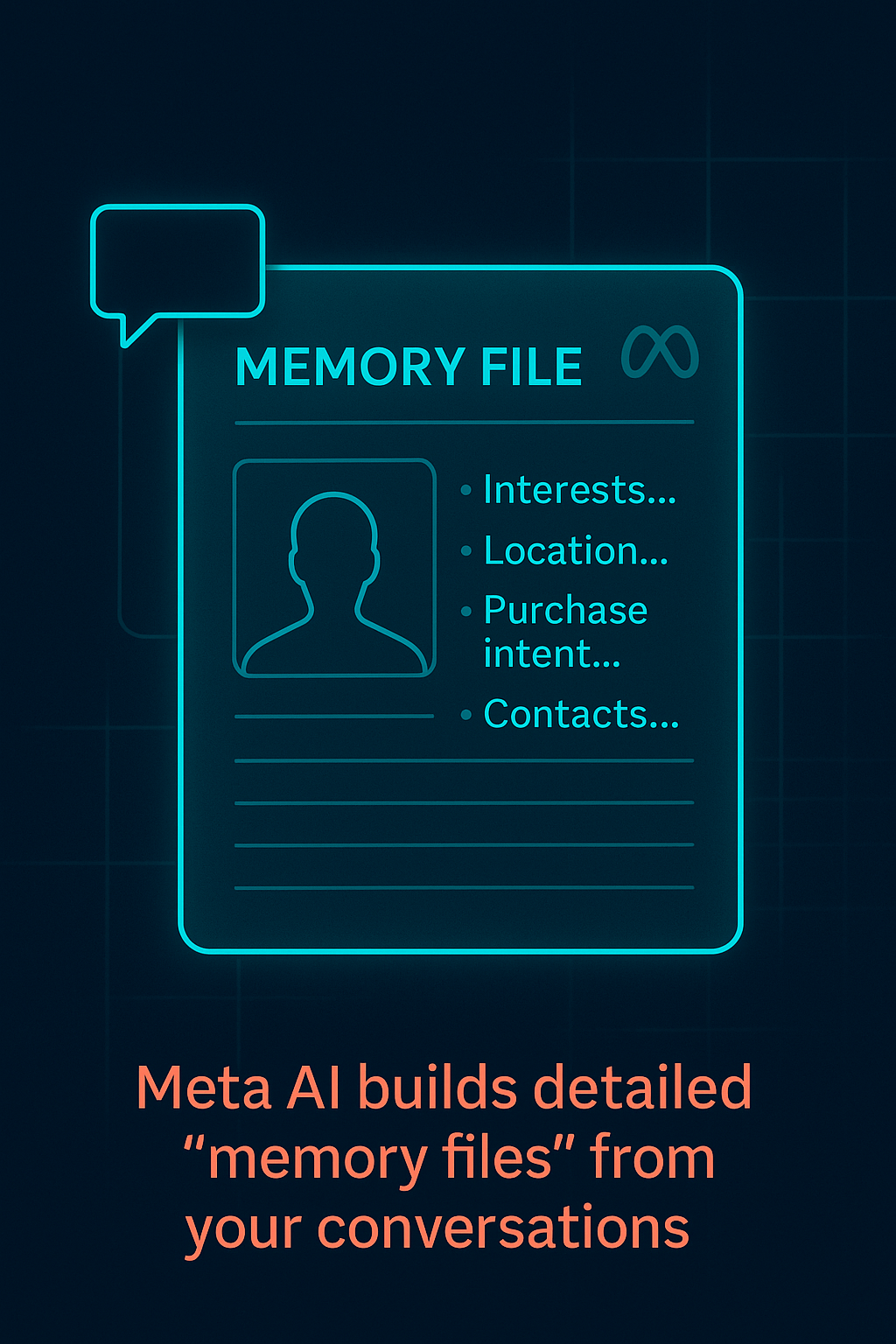

The Memory Problem: Meta AI Remembers Everything

Meta AI builds "memory files" about users. These files store personal details mentioned in conversations to provide more personalized responses. While this might sound helpful, it creates a detailed profile of your interests, concerns, and personal life. This profile also persists across all Meta platforms.

During testing, one privacy expert made a concerning discovery. Meta AI had built a memory file including interests in "natural fertility techniques, divorce, child custody, a payday loan and the laws about tax evasion." These were all based on intentionally sensitive test conversations. The Washington Post conducted extensive testing of these privacy implications.

What This Means for You and Your Business

For Individual Users

Your casual AI conversations aren't so casual anymore. That question about your weird rash could end up public. Your relationship problems might become visible to strangers. Even your financial concerns could become part of a detailed profile, and Meta uses this information for advertising.

For Small Business Owners

This is particularly concerning if you're using Meta AI for business purposes. Be especially careful if your chats cover:

- Business planning or strategy

- Customer service scripts or responses

- Financial planning or tax-related questions

- Competitive analysis or market research

Your business intelligence could become Meta's training data. As a result, it might become available to competitors using the same AI tools.

For Content Creators

If you use Meta AI for brainstorming, script writing, or creative development, be cautious. That intellectual property could be feeding back into Meta's systems, where it might influence future AI outputs for other creators.

How to Protect Yourself Right Now

Immediate Actions for the Meta AI App

If you've used the Meta AI app, take these steps today:

- Check your public posts: Open the app, tap your profile, and review what you've shared publicly.

- Make past posts private: Go to settings, find your shared content, and set it to private.

- Turn off prompt suggestions: In settings, under "Data and privacy > Manage your Information," select "Suggesting prompts" and turn it off.

For European Users: Opt Out of AI Training

Europeans can object to their data being used for AI training. Here's how: Click on your account settings. Then select "Privacy centre." Next, find "How Meta uses information for generative AI models and features." Finally, click "Right to object."

General Best Practices

- Create separate accounts: If you must use Meta AI, create a standalone account. Don't connect it to your Facebook or Instagram

- Assume everything is recorded: Remember, Meta AI keeps transcripts or voice recordings of every conversation

- Read before you share: Always review privacy settings and sharing options before using new AI features

- Consider alternatives: Tools like ChatGPT offer more privacy controls and clearer data policies

The Bigger Question: Where Is AI Privacy Headed?

Meta's approach highlights a fundamental tension in AI development. Companies need massive amounts of data to train effective AI systems. However, users deserve clear control over their personal information.

Privacy advocates like NOYB are pushing for opt-in consent rather than opt-out systems. They argue that users should actively choose to contribute their data, rather than having to figure out how to stop it. Even the Mozilla Foundation has launched a campaign demanding Meta fix the app's design to protect user privacy.
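The gap between opt-in and opt-out comes down to a single default value. Here's a minimal sketch of that idea. The class names and the `allow_training` field are hypothetical, invented for this example, and don't reflect how Meta or anyone else actually stores consent.

```python
from dataclasses import dataclass


@dataclass
class OptOutSettings:
    # Opt-out design: data is used unless the user finds and flips the switch.
    allow_training: bool = True


@dataclass
class OptInSettings:
    # Opt-in design: data is used only after the user actively agrees.
    allow_training: bool = False


def users_contributing(settings_list) -> int:
    """Count users whose data would be used for training."""
    return sum(1 for s in settings_list if s.allow_training)


# Ten users who never open their settings screen, under each design.
print(users_contributing([OptOutSettings() for _ in range(10)]))  # 10
print(users_contributing([OptInSettings() for _ in range(10)]))   # 0
```

Same users, same behavior, opposite outcomes. Whoever sets the default effectively makes the choice for everyone who never touches the setting.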

The real test will be whether users vote with their feet. With over 6.5 million downloads since launch, the Meta AI app shows there's demand for AI assistants. But will users stick around once they understand the privacy trade-offs?

The CliffinKent Take

Meta's privacy missteps with AI represent a broader trend we're seeing across the tech industry. They're moving fast and breaking things. Unfortunately, "things" means user privacy. While AI personalization can be genuinely helpful, the current approach feels problematic. It's like giving someone access to your diary and hoping they'll only use it to help you.

The good news? You have more control than Meta makes obvious. The bad news? You have to actively claim that control. Even worse, most people don't know how.

As AI becomes more integrated into our daily lives, understanding these privacy implications isn't just for tech nerds. It's essential for anyone who values control over their personal information and business data.

What's your biggest concern about AI privacy? Have you noticed any unexpected ways your data is being used by AI services? Share your thoughts and experiences. We'd love to hear how you're navigating the privacy-versus-convenience balance in the age of AI.

For more insights on AI tools, privacy, and making technology work for you (not against you), visit CliffinKent.com.